The Future of AI in Cybersecurity

In an era of relentless cyber threats and an increasingly stringent global privacy landscape, businesses face a critical challenge: How can they leverage vast amounts of data to build robust AI-powered defenses without violating customer trust and breaking the law?

The answer lies in a transformative technology paradigm: Federated Learning.

This guide explores how this decentralized AI approach, also known as collaborative AI, is revolutionizing cybersecurity. We will cover its key applications, its role in global compliance, and the real-world economic benefits for businesses.

What is Federated Learning? A Collaborative AI Approach

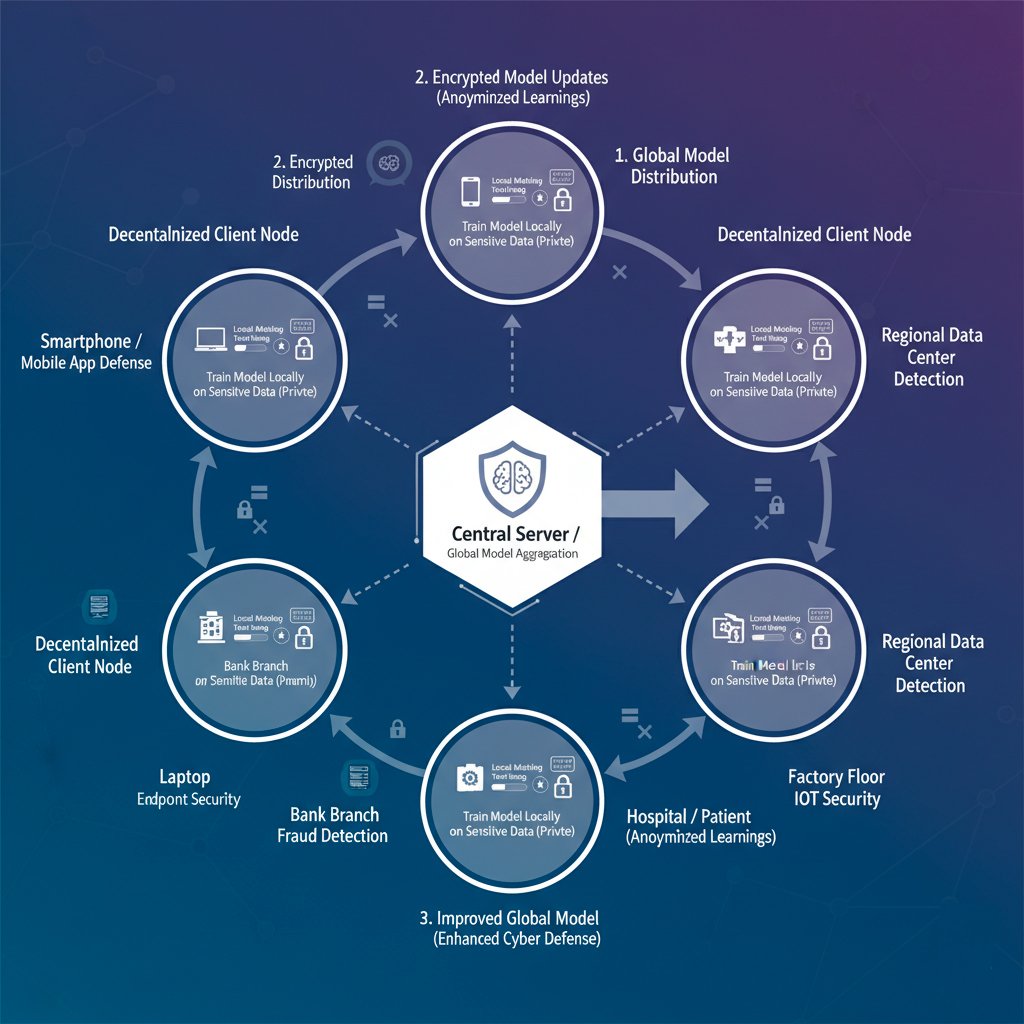

At its core, federated learning is a decentralized machine learning technique that trains an algorithm across multiple independent devices or servers without ever exchanging the raw data itself.

Instead of pooling sensitive information into a single, vulnerable data lake for analysis, the process is inverted:

- A central server sends a base AI model to individual devices (or "nodes").
- Each node trains the model on its own local data, creating an improved version.
- The learnings from these local models (small, anonymized updates) are encrypted and sent back.
- The central server aggregates these updates to create a smarter, more refined "global model," which is then distributed back to all nodes.

This privacy-preserving modeling approach ensures that sensitive data never leaves its source, offering a powerful solution to the classic privacy-versus-security dilemma.
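The train-locally-then-aggregate loop described above can be sketched in a few lines. This is a minimal illustration of federated averaging on a toy linear model, not the implementation of any particular platform; the function names (`local_train`, `federated_average`, `run_round`) and the toy data are assumptions made for the example.

```python
from typing import List, Tuple

Weights = List[float]
Dataset = List[Tuple[List[float], float]]  # (features, target) pairs held by one node


def local_train(weights: Weights, data: Dataset, lr: float = 0.1, epochs: int = 5) -> Weights:
    """Run a few epochs of SGD for a linear model on one node's private data."""
    w = list(weights)
    for _ in range(epochs):
        for x, y in data:
            error = sum(wi * xi for wi, xi in zip(w, x)) - y
            w = [wi - lr * error * xi for wi, xi in zip(w, x)]
    return w


def federated_average(updates: List[Tuple[Weights, int]]) -> Weights:
    """Average node weights, weighting each node by its number of samples."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]


def run_round(global_weights: Weights, node_datasets: List[Dataset]) -> Weights:
    """One round: send the model out, train locally, aggregate the results.

    Only weights cross the node boundary; the datasets are never combined.
    """
    updates = [(local_train(global_weights, d), len(d)) for d in node_datasets]
    return federated_average(updates)


# Hypothetical toy data: every node observes y = 2*x + 1.
# The second feature is a constant 1.0 that acts as the bias term.
nodes = [
    [([1.0, 1.0], 3.0), ([2.0, 1.0], 5.0)],
    [([0.0, 1.0], 1.0), ([3.0, 1.0], 7.0), ([1.5, 1.0], 4.0)],
]
weights = [0.0, 0.0]
for _ in range(200):
    weights = run_round(weights, nodes)
```

After enough rounds, `weights` approaches `[2.0, 1.0]` even though neither node ever sees the other's samples. Production systems would also encrypt the updates in transit, which this sketch leaves out.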

Key Applications of Federated Learning in Cybersecurity

This innovative AI method is being actively applied across critical areas of cybersecurity to create more intelligent and adaptive defense mechanisms against threats like malware, ransomware, and phishing.

AI-Powered Intrusion Detection Systems (IDS)

Network traffic is highly sensitive and varies by region. An IDS built on federated learning lets individual network nodes train models on their local traffic to identify anomalies. These localized insights are then combined into a global model that can recognize sophisticated, multi-stage attacks and zero-day threats that would be hard to spot from any single, isolated system.
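As a simplified illustration of combining localized insights, the sketch below has each node summarize one traffic feature (for example, bytes per flow) as three numbers, and merges those summaries into a global baseline for anomaly scoring. It aggregates summary statistics rather than model weights, so it is a stand-in for the idea and not a full federated IDS; all names, values, and the z-score threshold are assumptions.

```python
import math
from typing import List, Tuple

Stats = Tuple[int, float, float]  # (count, sum, sum of squares)


def local_stats(values: List[float]) -> Stats:
    """Summarize a node's traffic feature without exposing individual flows."""
    return len(values), sum(values), sum(v * v for v in values)


def merge_stats(all_stats: List[Stats]) -> Tuple[float, float]:
    """Combine per-node summaries into a global mean and standard deviation."""
    n = sum(s[0] for s in all_stats)
    total = sum(s[1] for s in all_stats)
    total_sq = sum(s[2] for s in all_stats)
    mean = total / n
    variance = max(total_sq / n - mean * mean, 0.0)  # guard against rounding below zero
    return mean, math.sqrt(variance)


def is_anomalous(value: float, mean: float, std: float, threshold: float = 3.0) -> bool:
    """Flag values more than `threshold` standard deviations from the global mean."""
    if std == 0.0:
        return value != mean
    return abs(value - mean) / std > threshold


# Hypothetical bytes-per-flow samples observed at two separate sites.
node_a = [100.0, 110.0, 90.0, 105.0]
node_b = [95.0, 102.0, 98.0, 108.0, 92.0]

global_mean, global_std = merge_stats([local_stats(node_a), local_stats(node_b)])
suspicious = is_anomalous(5000.0, global_mean, global_std)
```

Each node can then score its own traffic against the shared baseline locally, so no raw flows leave the site.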

Advanced Malware and Ransomware Analysis

To combat constantly evolving malware, security teams can use a distributed intelligence framework to collaboratively train detection models. Each participant trains a model on its local datasets of malicious and benign files. This allows the group to build a powerful, up-to-the-minute classifier without ever sharing proprietary code or customer files, a crucial advantage for securing applications like mobile banking.

Next-Generation Fraud Prevention

Financial institutions are using decentralized analytics to detect complex fraud schemes. By training a fraud detection model on distributed transaction data, banks can identify subtle patterns indicative of money laundering or card-not-present fraud without sharing confidential customer financial records.

A Framework for Global Privacy Law Compliance: GDPR, CCPA, and More

A key driver for adopting federated learning is its inherent alignment with the core principles of major data protection regulations across the globe.

- GDPR (European Union): It directly supports data minimization and purpose limitation, as raw data is never centrally collected.
- CCPA/CPRA (California, USA): By not transferring raw personal data, it becomes much harder to argue that a "sale" or "sharing" of data has occurred, simplifying compliance.
- LGPD (Brazil): It embodies the principles of necessity and data security, as the lack of a central database dramatically reduces the attack surface for a potential breach.
- PIPEDA (Canada): It simplifies consent management by allowing data to be used for model improvement on-device without it ever being transferred.
- PIPL (China): It provides a powerful solution to strict cross-border data transfer rules, as only anonymized model updates, not raw data, leave the country.
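Several of the points above rest on the model update revealing little about any individual record. One common way to limit what an update can reveal is to clip its size and add random noise before it leaves the node, which is the basic mechanism behind differentially private training. The sketch below shows only that mechanism; the function names are hypothetical, and `noise_std` is an illustrative value that is not calibrated to any formal privacy budget.

```python
import math
import random
from typing import List


def clip_update(update: List[float], max_norm: float) -> List[float]:
    """Scale the update down so its L2 norm is at most `max_norm`."""
    norm = math.sqrt(sum(u * u for u in update))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [u * scale for u in update]


def privatize_update(
    update: List[float], max_norm: float, noise_std: float, rng: random.Random
) -> List[float]:
    """Clip the update, then add independent Gaussian noise to each coordinate."""
    clipped = clip_update(update, max_norm)
    return [u + rng.gauss(0.0, noise_std) for u in clipped]


rng = random.Random(0)  # fixed seed so the example is repeatable
raw_update = [3.0, 4.0]  # hypothetical update with L2 norm 5
clipped = clip_update(raw_update, max_norm=1.0)
noisy_update = privatize_update(raw_update, max_norm=1.0, noise_std=0.1, rng=rng)
```

Clipping bounds how much any single node can shift the global model, and the noise masks the exact contribution. Choosing the noise level to meet a stated privacy guarantee is a separate calibration step not shown here.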

The Economic Benefits of Privacy-Preserving AI

Adopting a privacy-first AI methodology like federated learning provides substantial and tangible economic advantages for businesses.

Quantifiable Economic Benefits:

- Reduced Cost of Data Breaches: With the average data breach costing millions, avoiding data centralization directly saves money in potential fines and remediation.
- Lower Infrastructure Costs: The immense expense of building and maintaining a secure, compliant central data lake for AI training is largely avoided.
- Increased Operational Efficiency: More accurate models mean security analysts waste less time on false positives and fewer real attacks are missed.

Real-World Threats Avoided:

- Zero-Day Exploits: The system excels at identifying the unusual patterns of new attacks that have no known signature.
- Sophisticated Phishing Campaigns: Faint signals from across a global network can be aggregated to identify and shut down large-scale phishing attacks.
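To make the idea of aggregating faint signals concrete, the sketch below combines per-node counts of suspicious sender domains and flags a domain only when the combined evidence crosses thresholds that no single node reaches alone. This is a deliberate simplification that shares counts rather than model updates; the domain names, thresholds, and function names are all hypothetical.

```python
from collections import Counter
from typing import Dict, List


def aggregate_reports(node_reports: List[Dict[str, int]]) -> Counter:
    """Sum the per-domain suspicious-message counts reported by every node."""
    totals: Counter = Counter()
    for report in node_reports:
        totals.update(report)
    return totals


def flag_campaigns(
    node_reports: List[Dict[str, int]], min_total: int = 10, min_nodes: int = 3
) -> List[str]:
    """Flag domains seen by enough nodes with a high enough combined count."""
    totals = aggregate_reports(node_reports)
    seen_by = Counter(
        domain for report in node_reports for domain, count in report.items() if count > 0
    )
    return sorted(
        d for d, c in totals.items() if c >= min_total and seen_by[d] >= min_nodes
    )


# Hypothetical reports: no single node sees 10 or more messages from one domain.
reports = [
    {"login-example.test": 4, "news.test": 1},
    {"login-example.test": 3},
    {"login-example.test": 5, "shop.test": 2},
]
flagged = flag_campaigns(reports)
```

Here `flagged` contains only `login-example.test`, which appears at all three nodes with a combined count of 12.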

The Reality of Implementation: A Look at Sherpa.ai

While the benefits are clear, implementation requires investment. Let's consider a leading platform in this space, Sherpa.ai, as an example.

Implementation Time

A realistic implementation timeline for a single cybersecurity application ranges from 2 to 5 months, covering planning, infrastructure setup, integration, and initial model training.

Implementation Costs

Enterprise platforms typically do not publish pricing, so the figures below are rough estimates. A business should budget for:

- Platform Licensing Fee: An annual fee likely ranging from $25,000 to over $1,000,000.
- Professional Services & Integration: A significant one-time cost, potentially $5,000 to $500,000+.
- Internal Costs & Ongoing Maintenance: Costs for internal staff and annual support fees.

A large-scale deployment can represent an initial investment in the mid-six to low-seven figures.
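As a rough check on these figures, summing the low and high ends of the two quoted ranges gives a first-year span. The helper name is hypothetical, and internal staffing and maintenance costs are left out because no figures are given for them, so the real total would be higher.

```python
from typing import Tuple


def first_year_range(
    license_range: Tuple[int, int], services_range: Tuple[int, int]
) -> Tuple[int, int]:
    """Add the low ends and the high ends of the license and services estimates."""
    low = license_range[0] + services_range[0]
    high = license_range[1] + services_range[1]
    return low, high


# Ranges quoted above; both upper bounds are open-ended in the text.
low, high = first_year_range((25_000, 1_000_000), (5_000, 500_000))
print(f"${low:,} to ${high:,}+")  # prints "$30,000 to $1,500,000+"
```

The upper end lands in the low seven figures, which is consistent with the large-scale estimate above.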

Frequently Asked Questions (FAQ)

1. How is federated learning different from traditional machine learning? The key difference is where the data is processed. Traditional AI requires centralizing data for training. Federated learning reverses that; it sends the model to the data's location. This fundamentally enhances privacy and security.

2. Who owns the data and the final AI model? Data ownership remains with the original source. The user or organization never relinquishes control of their data. The final global model, which contains aggregated mathematical patterns but no raw data, is typically owned by the entity orchestrating the network.

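3. Is federated learning secure against attacks? No system is completely immune, but the decentralized architecture removes the single central data store as a target. Secure aggregation lets the server see only the combined update rather than any one participant's contribution, and differential privacy adds noise so that individual records are hard to infer from an update. Model poisoning by a malicious participant is a separate risk, usually addressed with robust aggregation and checks on incoming updates.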

4. What industries can benefit from federated learning? Any industry handling sensitive, distributed data can benefit. This includes healthcare (training on patient data from different hospitals), telecommunications (detecting network faults), and industrial manufacturing (predicting machine failures).

5. What is the role of the central server if it never sees the data? The central server acts as an orchestrator. It distributes the base model, securely receives and aggregates the encrypted model updates, builds the improved global model, and distributes it back to the participants. It manages the learning process without ever accessing the underlying sensitive data.
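Questions 3 and 5 both turn on how a server can aggregate updates it cannot read. One well-known approach is pairwise masking: each pair of nodes shares a random mask that one adds and the other subtracts, so the masks cancel in the sum. The sketch below illustrates only that cancellation. The seeds in `pair_seeds` are hypothetical placeholders for values a real protocol would derive through key agreement, and Python's `random` module is not cryptographically secure.

```python
import random
from typing import Dict, List, Tuple

MODULUS = 2**32
SCALE = 10_000  # fixed-point scale used to turn floats into integers


def encode(update: List[float]) -> List[int]:
    """Convert a float update to fixed-point integers modulo MODULUS."""
    return [round(u * SCALE) % MODULUS for u in update]


def pair_mask(seed: int, dim: int) -> List[int]:
    """Derive the mask shared by one pair of nodes (illustrative PRNG only)."""
    rng = random.Random(seed)
    return [rng.randrange(MODULUS) for _ in range(dim)]


def mask_update(
    node_id: int, update: List[float], pair_seeds: Dict[Tuple[int, int], int]
) -> List[int]:
    """Add the mask for pairs where this node is first, subtract where it is second."""
    masked = encode(update)
    for (a, b), seed in pair_seeds.items():
        if node_id not in (a, b):
            continue
        sign = 1 if node_id == a else -1
        mask = pair_mask(seed, len(update))
        masked = [(m + sign * r) % MODULUS for m, r in zip(masked, mask)]
    return masked


def aggregate(masked_updates: List[List[int]]) -> List[float]:
    """Sum masked updates; the pairwise masks cancel, leaving the true total."""
    dim = len(masked_updates[0])
    totals = [sum(u[i] for u in masked_updates) % MODULUS for i in range(dim)]
    # Values in the upper half of the range represent negative numbers.
    return [(t - MODULUS if t >= MODULUS // 2 else t) / SCALE for t in totals]


# Hypothetical updates from three nodes and one shared seed per pair.
updates = {0: [0.5, -1.25], 1: [1.0, 0.25], 2: [-0.5, 2.0]}
pair_seeds = {(0, 1): 11, (0, 2): 22, (1, 2): 33}

masked = [mask_update(i, u, pair_seeds) for i, u in updates.items()]
total = aggregate(masked)
```

The server recovers the sum `[1.0, 1.0]` while each individual masked vector looks random to it. Real protocols also handle nodes that drop out mid-round, which this sketch ignores.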